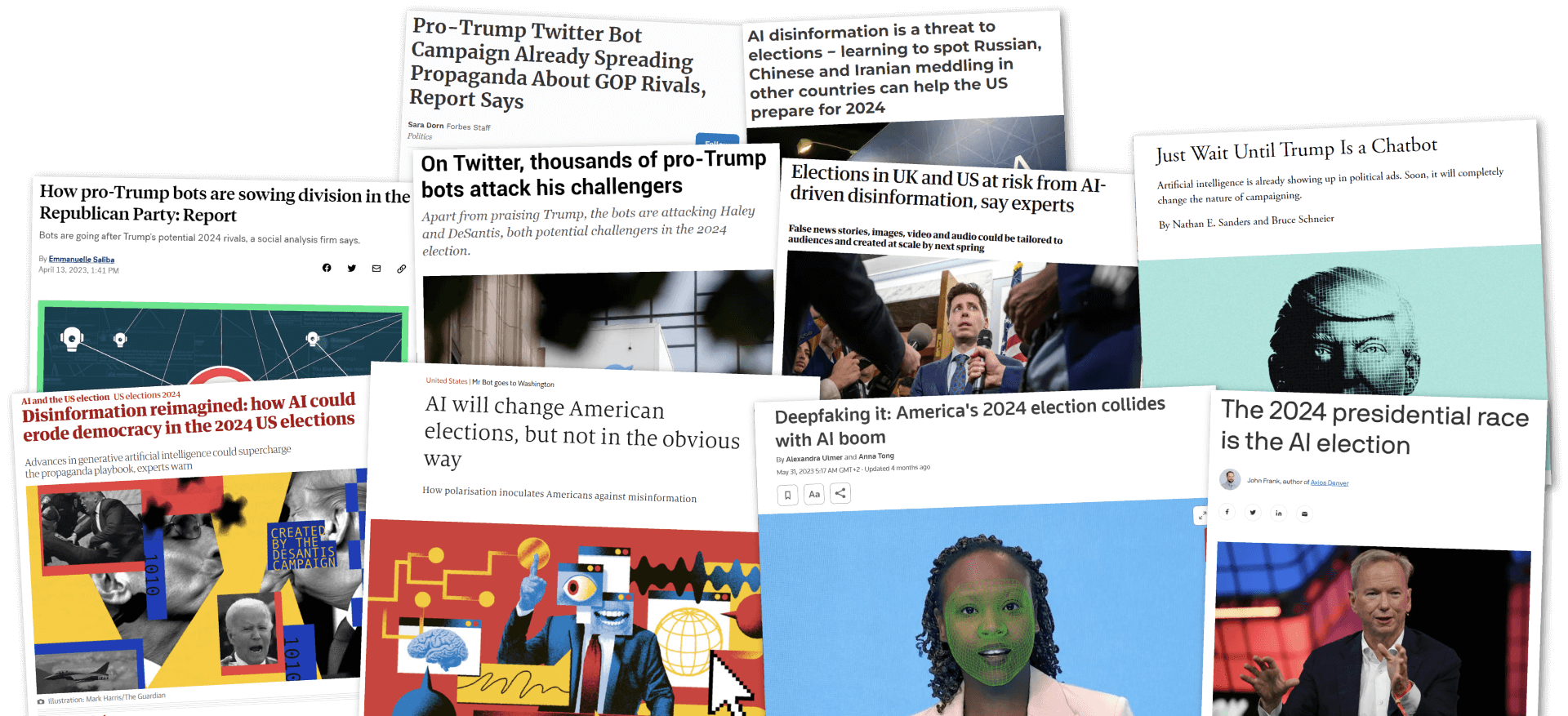

DOES YOUR CAMPAIGN HAVE AN ANTI-BOT STRATEGY?

Genuine political discourse is in danger of being drowned out by the noise generated by bots and trolls. Legitimate candidates may find it extremely difficult to counter the barrage of disinformation, and voters may struggle to distinguish fact from fiction. Public trust in the electoral system could be severely eroded, leading to widespread disillusionment and apathy. In the worst-case scenario, bots could sway an election in favor of a candidate who does not genuinely represent the will of the people.

In light of this, an effective defense against these tactics is crucial to safeguarding the integrity of the democratic process and fostering public trust in the electoral system.

What Chenope Technology Can Do for Campaigns

Detect

Detect coordinated inauthentic activity targeting your candidate that is not properly labeled as being associated with a political organization.

Detect individual accounts that are not who or what they claim to be.

Visualize

Visualize trends and individual instances of collusion and inauthenticity on an interactive digital platform, presented clearly and accessibly for the voting public.

Combat

Actively combat coordinated activity in the forums where it occurs, using a personalized avatar that calls out suspect accounts and educates the public. Trusted voters can play an active role by reporting suspicious behavior to the avatar.